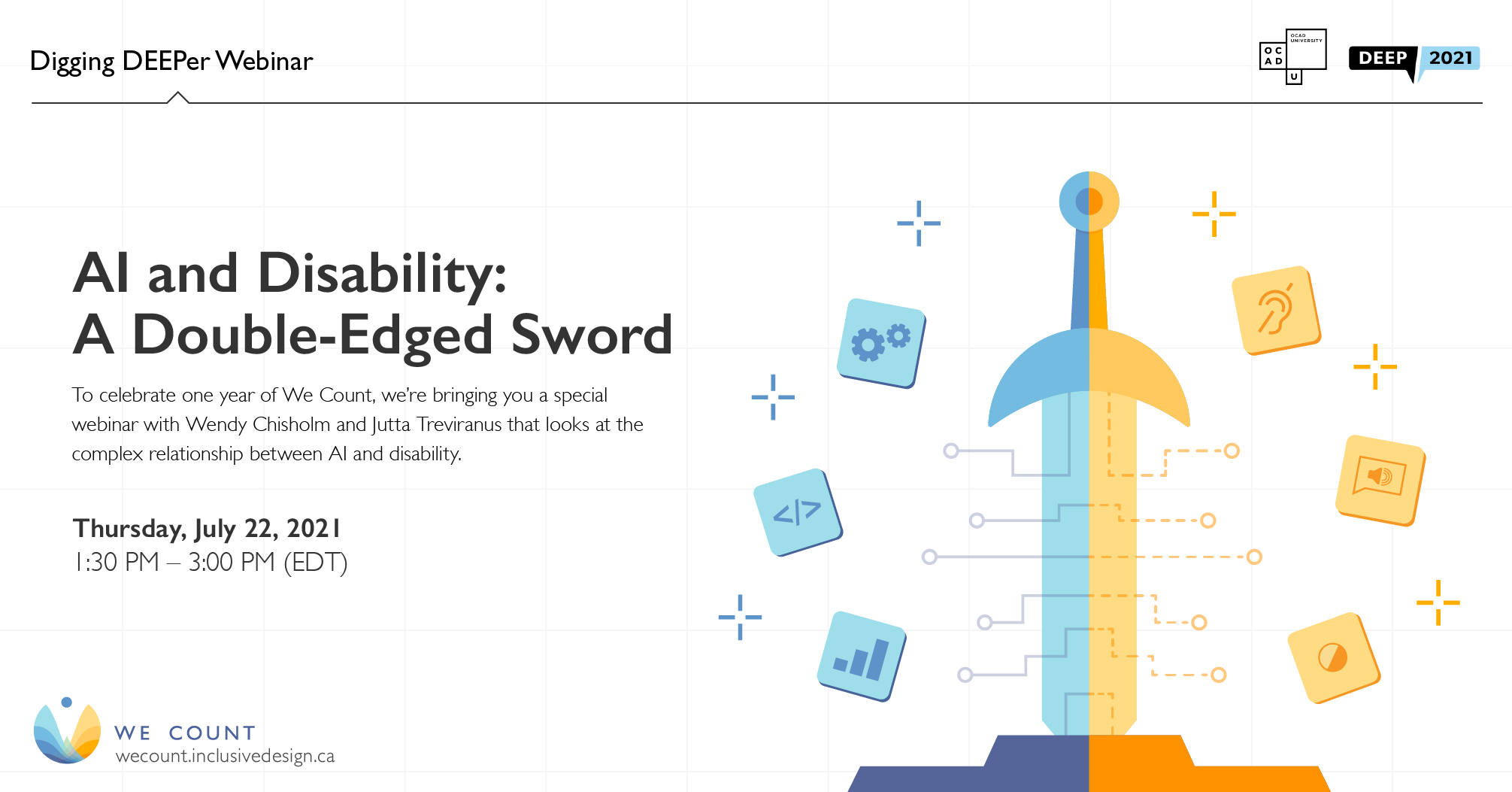

AI and Disability: A Double-Edged Sword

To celebrate the anniversary of the We Count Digging Deeper Series, we’re bringing you a special webinar that features an intimate conversation between Wendy Chisholm and Jutta Treviranus about the complex relationship between innovative technology and disability, with a focus on artificial intelligence (AI). Drawing on their wealth of knowledge and experience, these panelists will discuss the risks and rewards of AI for people with disabilities, as well as what the future of the industry may hold for the disability community.

July 22, 2021, 1:30 PM – 3:00 PM (EDT)

AI and Disability webinar video

Panelists:

Wendy Chisholm has been working to make the world more equitable since 1995, when she started working on what would become the W3C’s Web Content Accessibility Guidelines, the basis of worldwide web accessibility policy. Since then, she has co-written Universal Design for Web Applications with Matt May (O’Reilly, 2008), worked as a consultant, and appeared as Wonder Woman in a web comic with the other HTML5 Super Friends. In 2010, she joined Microsoft to infuse accessibility into its engineering processes and drove the development of what is now Accessibility Insights. Since 2018, she has managed the selection and funding of projects for Microsoft’s AI for Accessibility program, a $25 million grant program to accelerate AI innovations that are developed with or by people with disabilities.

Jutta Treviranus is the Director of the Inclusive Design Research Centre (IDRC) and a professor in the Faculty of Design at OCAD University. Jutta established the IDRC in 1993 as the nexus of a growing global community that proactively works to ensure that our digitally transformed and globally connected society is designed inclusively. She also heads the Inclusive Design Institute, a multi-university regional centre of expertise, and founded an innovative graduate program in inclusive design at OCAD University. She leads international multi-partner research networks that have created broadly implemented innovations that support digital equity. She has played a leading role in developing accessibility legislation, standards and specifications internationally (including W3C WAI ATAG, IMS AccessForAll, ISO 24751, and AODA Information and Communication). She serves on many advisory bodies globally to provide expertise in equitable policy design. Jutta’s work has been credited as the impetus for the adoption of more inclusive practices at large enterprise companies such as Microsoft and Adobe.

Earn a Learner badge

You will learn:

- The risks and rewards of AI for people with disabilities

- How future developments in different industries may impact the disability community

Learn and earn badges from this event:

- Watch the accessible AI and Disability: A Double-Edged Sword webinar

- Apply for your Learner badge